Generative AI Cybersecurity

Secure Your GenAI Use Cases with S3CURE/AI

DTS Solution recognizes that Generative Artificial Intelligence (GenAI) is revolutionizing the way industries operate, pushing the boundaries of innovation and efficiency.

As businesses increasingly adopt Large Language Models (LLMs), integrating them into their products and services, the importance of robust cybersecurity and governance measures becomes paramount.

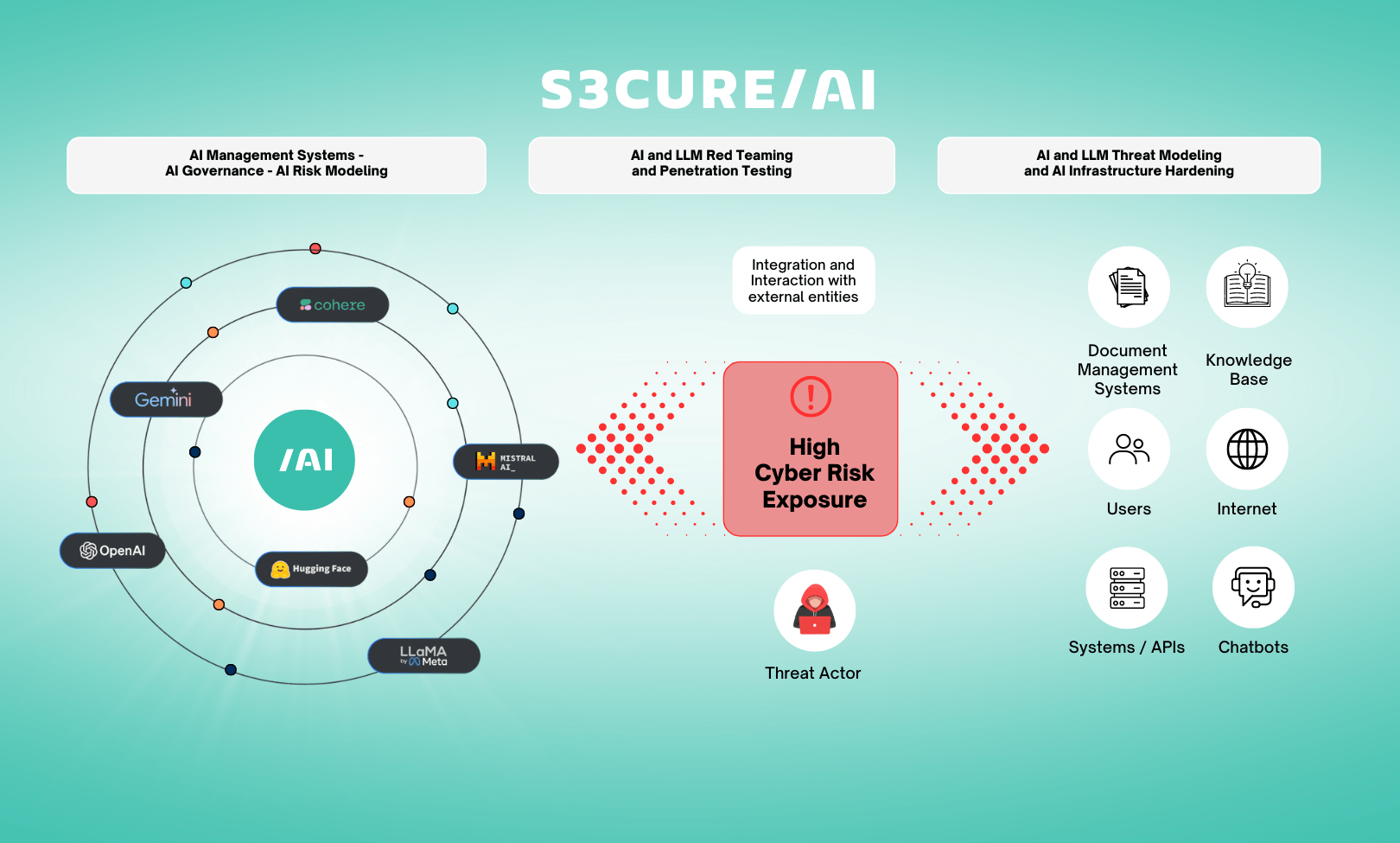

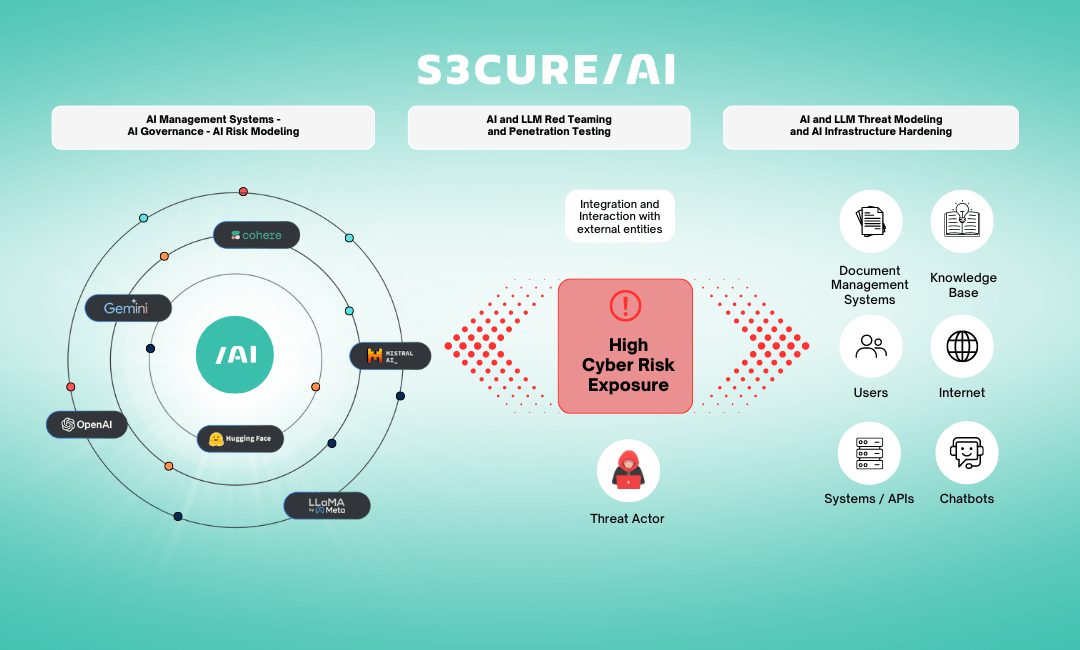

With our S3CURE/AI initiative, DTS Solution leads the way in securing AI-driven technologies. We understand that the primary cybersecurity risks often stem from the integration of AI models into existing systems and workflows, not merely from the AI technologies themselves.

S3CURE/AI provides a strategic framework to ensure your GenAI adoption is secured against cyber threats.

By combining comprehensive AI governance, precise risk modeling, rigorous security testing (LLM red teaming and penetration testing), and AI validation through threat modeling and infrastructure hardening, S3CURE/AI empowers your organization to leverage GenAI innovations securely and confidently.

Neglecting these risks can expose your organization and customers to significant threats, including data leaks, unauthorized access, and non-compliance with regulations.

With S3CURE/AI, we help mitigate the real-world risks of integrating GenAI into your enterprise systems and workflows.

Backed by our expertise as a leading cybersecurity firm, we offer a comprehensive approach to securing AI-driven technologies, ensuring your organization’s safe and compliant adoption of GenAI and LLMs.

Guarding against GenAI Threats

Prompt Injection and Jailbreaking

Uncontrolled Autonomy and Malicious Use

Inference Attacks

Weak Tool or Plugin Security

Inadequate Monitoring, Logging, and Rate Limiting

Improper Output Validation

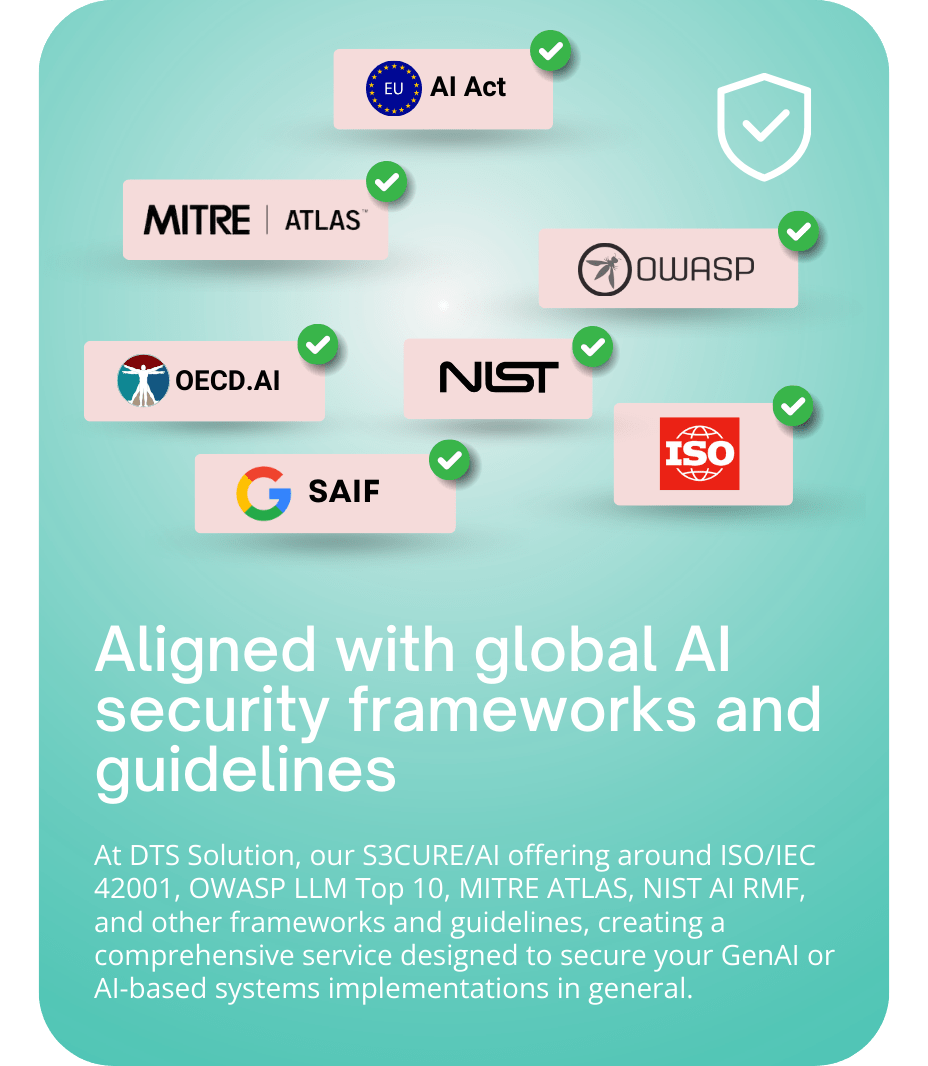

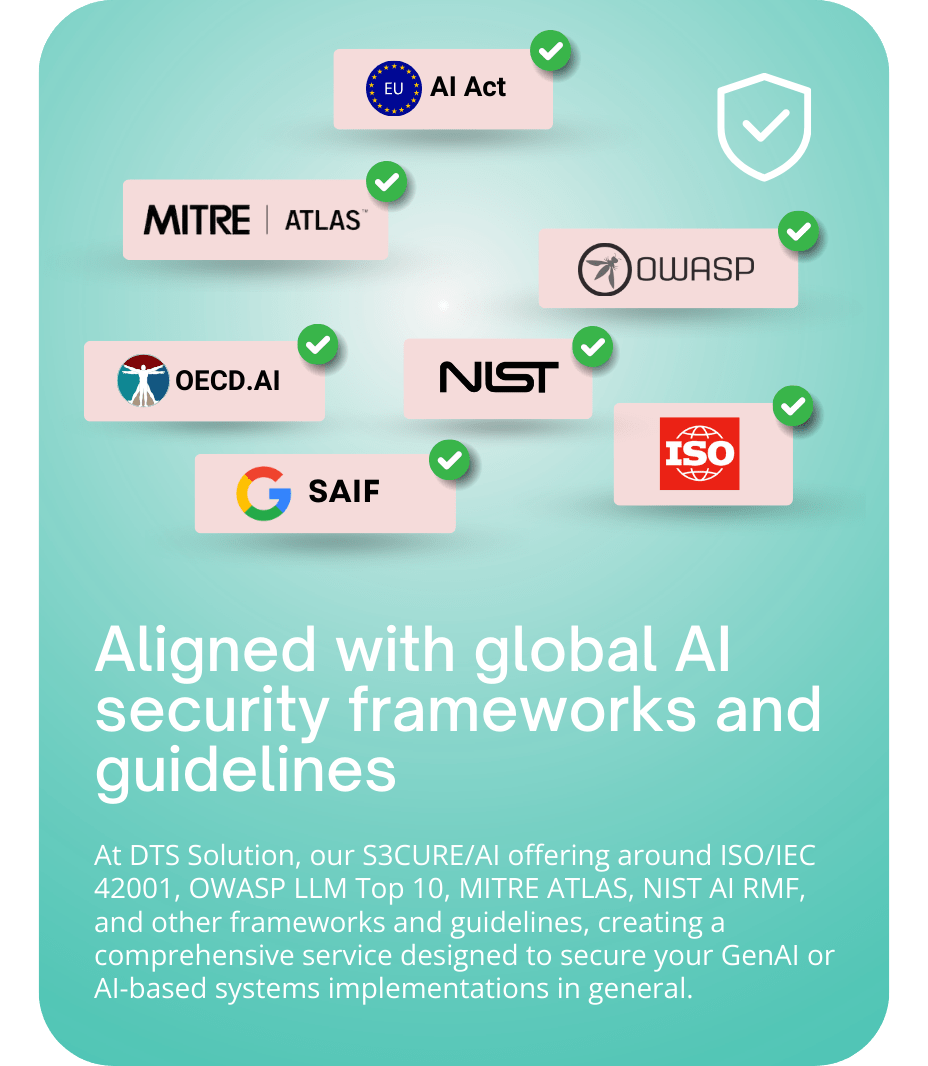

At DTS Solution, our S3CURE/AI offering is built around ISO/IEC 42001, the OWASP LLM Top 10, MITRE ATLAS, and the NIST AI RMF framework and guidelines, creating a comprehensive service designed to secure your GenAI implementations.

S3CURE/AI integrates best practices from these standards to provide a robust, end-to-end solution that addresses the unique security challenges of GenAI. From governance and risk management to penetration testing and infrastructure hardening, S3CURE/AI ensures your AI innovations remain secure and compliant.

Safeguard Your LLMs and GenAI Use Cases

Whether your organization is just beginning to explore GenAI-powered solutions or has already integrated custom deployments, our consultants are here to help you identify and mitigate potential cybersecurity risks at every phase.

We assist in the secure adoption and integration of AI by thoroughly assessing potential security vulnerabilities in your GenAI/LLM interactions and system workflows, providing actionable recommendations for secure deployment.

We tailor our assessment approach to your specific needs and can combine several methods to maximize protection.

Contact us today to find the best approach for your organization.

Safeguarding AI with S3CURE/AI

S1 - AI Governance Management Systems (AIMS)

Governance, risk management, and lifecycle control for AI systems.

AI Governance Policies

AI Cyber Risk Modeling and Assessment

AI Adversarial Defense Blueprint

S2 - AI and LLM Red Teaming & PenTesting

Offensive security testing to identify vulnerabilities in AI models and systems.

AI/LLM Red Team Simulations

Penetration Testing for AI/LLM Systems

S3 - AI/LLM Threat Modeling and Infrastructure Hardening

Anticipating threats and reinforcing AI infrastructure.

AI Threat Modeling Assessment

AI Infrastructure Security Hardening

Dubai

Office 7, Floor 14

Makeen Tower, Al Mawkib St.

Al Zahiya Area

Abu Dhabi, UAE

Mezzanine Floor, Tower 3

Mohammad Thunayyan Al-Ghanem Street, Jibla

Kuwait City, Kuwait

+971 4 3383365

160 Kemp House, City Road

London, EC1V 2NX

United Kingdom

Company Number: 10276574

The website is our proprietary property and all source code, databases, functionality, software, website designs, audio, video, text, photographs, icons and graphics on the website (collectively, the “Content”) are owned or controlled by us or licensed to us, and are protected by copyright laws and various other intellectual property rights. The content and graphics may not be copied, in part or full, without the express permission of DTS Solution LLC (owner) who reserves all rights.

DTS Solution, DTS-Solution.com, the DTS Solution logo, HAWKEYE, FYNSEC, FRONTAL, HAWKEYE CSOC WIKI and Firewall Policy Builder are registered trademarks of DTS Solution, LLC.