Generative AI (GenAI) and Large Language Models (LLMs) are reshaping industries through innovation and efficiency. AI has enormous potential for automating customer interactions and improving decision-making. However, as AI usage grows, so do its associated risks such as Prompt injections, model theft, training data poisoning, and other AI-specific vulnerabilities have become part of the digital threat landscape.

Traditional security techniques are insufficient in securing AI systems. AI and LLM Red Teaming and Penetration Testing offer proactive, adversarial ways to identify vulnerabilities before they are exploited.

DTS Solution’s S3CURE/AI Framework combines Red Teaming and Penetration Testing to keep your AI systems secure, robust, and compliant. Let’s see how we can accomplish this.

The Growing Need for AI and LLM Red Teaming and Penetration Testing

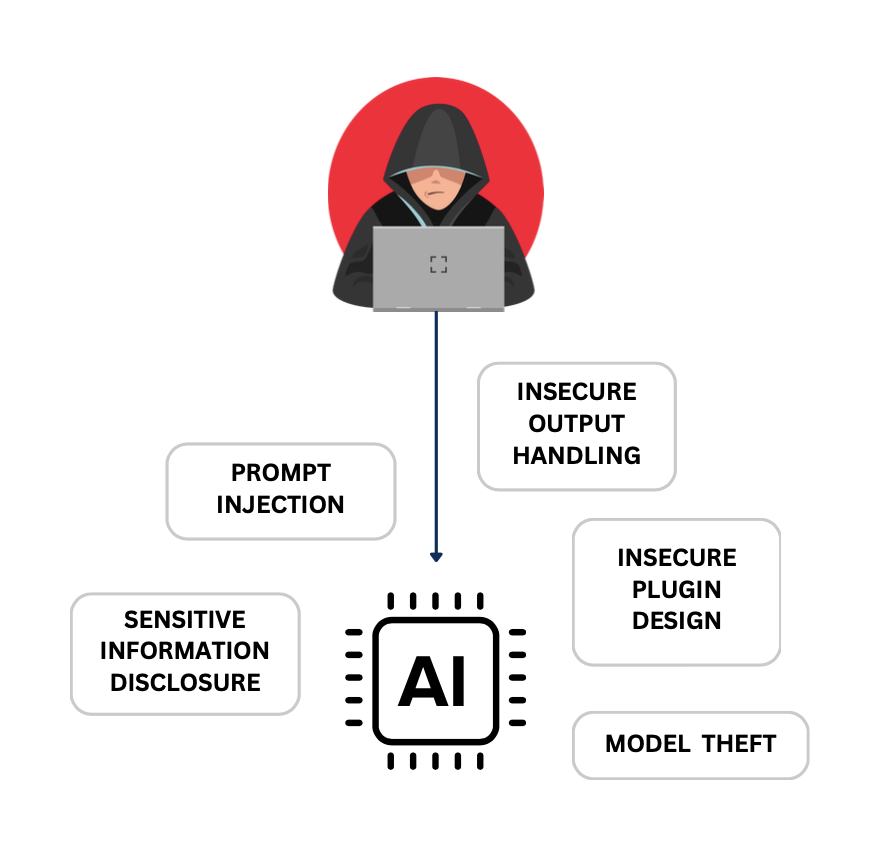

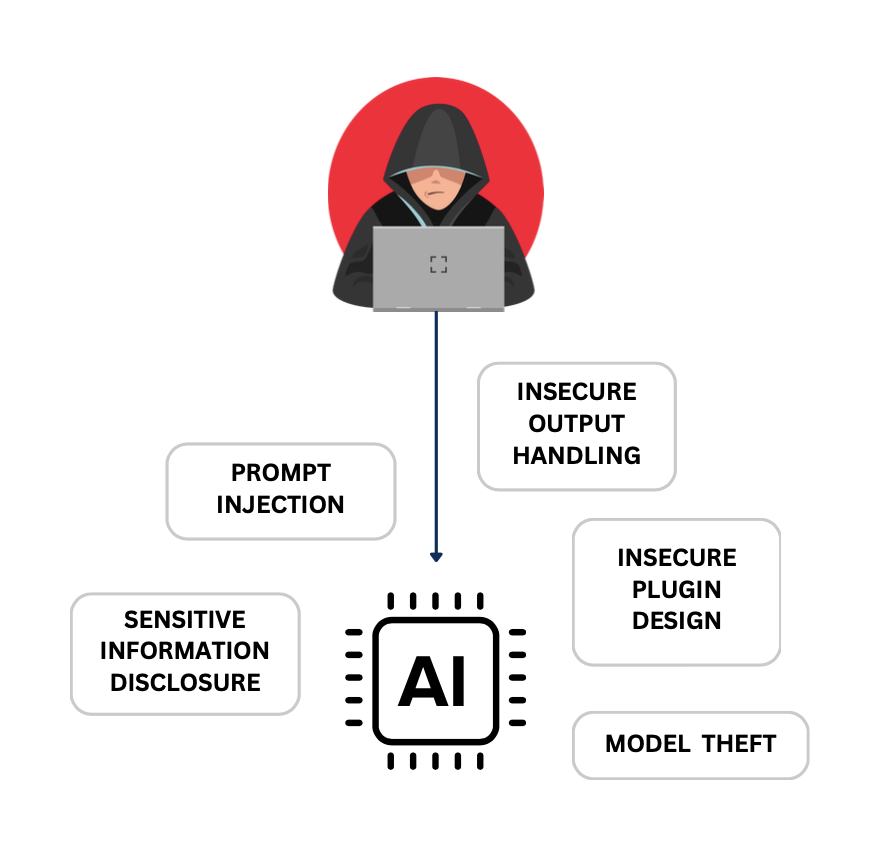

As AI and LLM technologies become more integrated in business activities, they create new vulnerabilities that traditional security solutions cannot handle. Proactive testing, such as Red Teaming and Penetration Testing, is required to uncover these dangers before bad actors may exploit them.

- Prompt Injection Attacks: entails creating malicious inputs to alter AI outputs, causing the system to deliver unexpected or damaging results.

- Training Data Poisoning: entails introducing compromised data into the model’s learning process, resulting in inaccurate or biased results.

- Model Inversion: entails extracting sensitive information from AI model outputs, jeopardizing data privacy and confidentiality.

- Inference Attacks: entail reconstructing private or sensitive data points by analyzing model responses, which may reveal vital information.

- Model Theft: entails stealing proprietary AI models, which undermine intellectual property and competitive advantages.

Generative AI (GenAI) and Large Language Models (LLMs) are reshaping industries through innovation and efficiency. AI has enormous potential for automating customer interactions and improving decision-making. However, as AI usage grows, so do its associated risks such as Prompt injections, model theft, training data poisoning, and other AI-specific vulnerabilities have become part of the digital threat landscape.

Traditional security techniques are insufficient in securing AI systems. AI and LLM Red Teaming and Penetration Testing offer proactive, adversarial ways to identify vulnerabilities before they are exploited.

DTS Solution’s S3CURE/AI Framework combines Red Teaming and Penetration Testing to keep your AI systems secure, robust, and compliant. Let’s see how we can accomplish this.

The Growing Need for AI and LLM Red Teaming and Penetration Testing

As AI and LLM technologies become more integrated in business activities, they create new vulnerabilities that traditional security solutions cannot handle. Proactive testing, such as Red Teaming and Penetration Testing, is required to uncover these dangers before bad actors may exploit them.

- Prompt Injection Attacks: entails creating malicious inputs to alter AI outputs, causing the system to deliver unexpected or damaging results.

- Training Data Poisoning: entails introducing compromised data into the model’s learning process, resulting in inaccurate or biased results.

- Model Inversion: entails extracting sensitive information from AI model outputs, jeopardizing data privacy and confidentiality.

- Inference Attacks: entail reconstructing private or sensitive data points by analyzing model responses, which may reveal vital information.

- Model Theft: entails stealing proprietary AI models, which undermine intellectual property and competitive advantages.

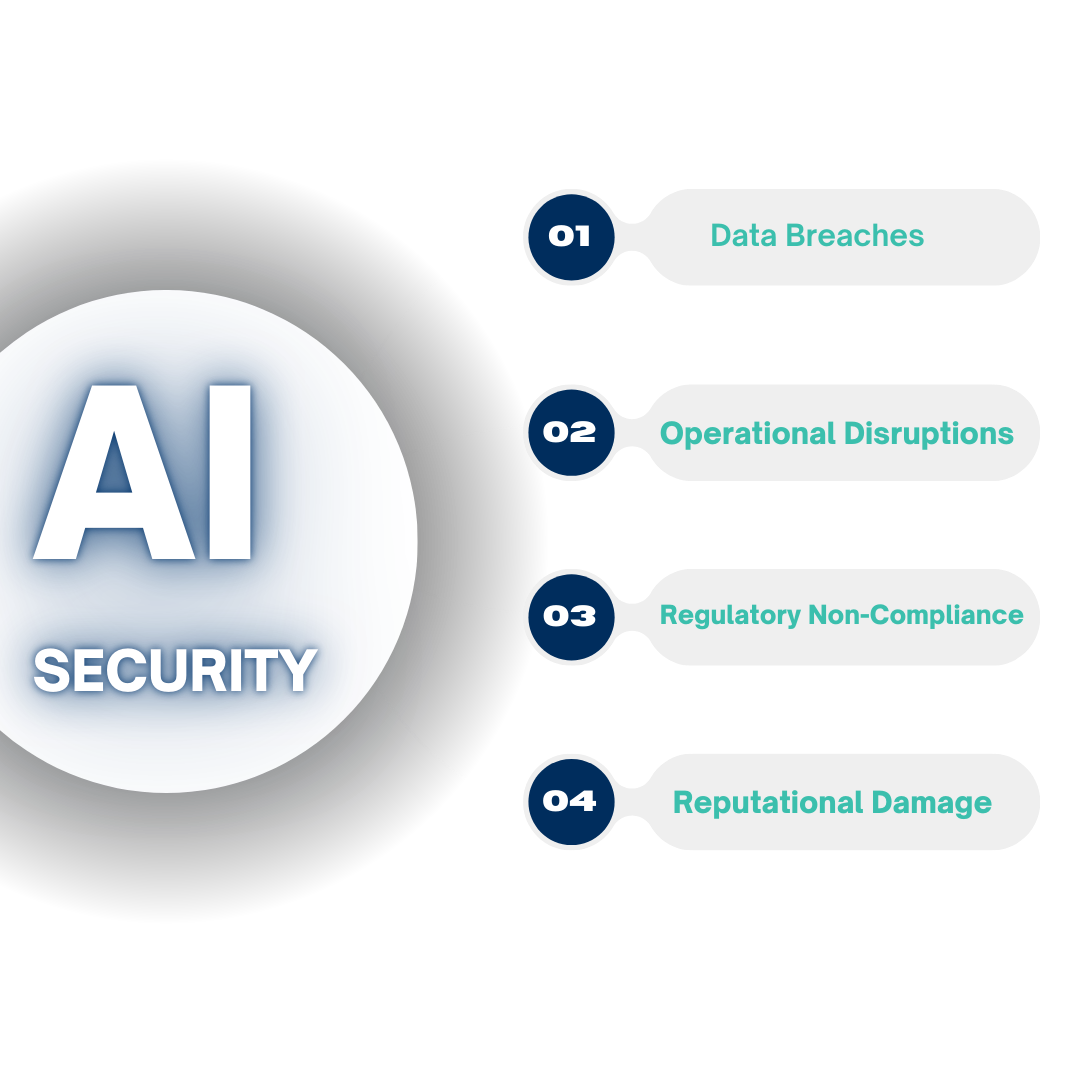

Consequences of Unmitigated Risks

Without rigorous and proactive testing, these vulnerabilities can result in significant business impacts:

- Data Breaches – Sensitive information can be exposed, leading to legal repercussions and loss of client trust.

- Operational Disruptions – AI systems compromised by adversaries can interrupt business processes, impacting productivity and efficiency.

- Regulatory Non-Compliance – Violations of data protection regulations such as GDPR, the EU AI Act, or HIPAA can result in substantial fines and legal liabilities.

- Reputational Damage – Security breaches can erode stakeholder confidence, damaging your organization’s reputation and market standing.

Consequences of Unmitigated Risks

Without rigorous and proactive testing, these vulnerabilities can result in significant business impacts:

- Data Breaches – Sensitive information can be exposed, leading to legal repercussions and loss of client trust.

- Operational Disruptions – AI systems compromised by adversaries can interrupt business processes, impacting productivity and efficiency.

- Regulatory Non-Compliance – Violations of data protection regulations such as GDPR, the EU AI Act, or HIPAA can result in substantial fines and legal liabilities.

- Reputational Damage – Security breaches can erode stakeholder confidence, damaging your organization’s reputation and market standing.

What is AI and LLM Red Teaming and Penetration Testing in S3CURE/AI?

DTS’s S3CURE/AI Framework offers a systematic, adversarial approach designed to safeguard your AI systems. By simulating real-world attacks and uncovering vulnerabilities, we ensure your AI models remain secure, resilient, and compliant. Our approach focuses on three core objectives:

- Simulating Real-World Threats: We develop sophisticated scenarios that mimic actual adversaries, targeting vulnerabilities in AI models, APIs, plugins, and data pipelines.

- Identifying Exploitable Weaknesses: Our team probes for potential flaws such as prompt manipulation, insecure outputs, and data exfiltration risks to uncover exploitable gaps in your AI security.

- Strengthening Defenses: We deliver actionable insights and strategic recommendations to reinforce your AI security controls, ensuring a robust defense against evolving threats.

Key Focus Areas of S3CURE/AI Red Teaming and Penetration Testing

- Prompt Injection and Jailbreaking: Crafting malicious inputs to bypass safety mechanisms, forcing AI systems to reveal sensitive information or perform unintended actions.

- Training Data Poisoning: Introducing corrupted data into training datasets to manipulate AI behavior and produce inaccurate or biased outputs.

- Model Inversion and Data Leakage: Extracting confidential information embedded in model outputs, compromising data privacy and security.

- Insecure Plugin and API Exploits: Identifying vulnerabilities in third-party integrations and APIs that can serve as entry points for attackers.

- Stress Testing Resilience: Evaluating how AI systems handle adversarial inputs and unexpected conditions to ensure reliability and robustness under pressure.

Our AI and LLM Red Teaming and Penetration Testing Methodology

1. Threat Modeling and Reconnaissance: We thoroughly map the attack surface of your AI system, pinpointing potential vulnerabilities across models, datasets, infrastructure, and third-party integrations.

2. Adversarial Simulation: We emulate real-world attack methods, including:

- Prompt Injection: Crafting inputs to manipulate model outputs.

- Model Inversion: Reconstructing sensitive information from model outputs.

- Data Poisoning: Compromising the integrity of AI training processes by introducing malicious data.

3. Attack Execution and Analysis: We perform controlled attack scenarios to assess your defenses and identify exploitable weaknesses within your system.

4. Impact Assessment and Recommendations: We provide comprehensive reports detailing discovered vulnerabilities, their potential impacts, and actionable, step-by-step recommendations to mitigate risks and enhance your AI system’s security posture.

What is AI and LLM Red Teaming and Penetration Testing in S3CURE/AI?

DTS’s S3CURE/AI Framework offers a systematic, adversarial approach designed to safeguard your AI systems. By simulating real-world attacks and uncovering vulnerabilities, we ensure your AI models remain secure, resilient, and compliant. Our approach focuses on three core objectives:

- Simulating Real-World Threats: We develop sophisticated scenarios that mimic actual adversaries, targeting vulnerabilities in AI models, APIs, plugins, and data pipelines.

- Identifying Exploitable Weaknesses: Our team probes for potential flaws such as prompt manipulation, insecure outputs, and data exfiltration risks to uncover exploitable gaps in your AI security.

- Strengthening Defenses: We deliver actionable insights and strategic recommendations to reinforce your AI security controls, ensuring a robust defense against evolving threats.

Key Focus Areas of S3CURE/AI Red Teaming and Penetration Testing

- Prompt Injection and Jailbreaking: Crafting malicious inputs to bypass safety mechanisms, forcing AI systems to reveal sensitive information or perform unintended actions.

- Training Data Poisoning: Introducing corrupted data into training datasets to manipulate AI behavior and produce inaccurate or biased outputs.

- Model Inversion and Data Leakage: Extracting confidential information embedded in model outputs, compromising data privacy and security.

- Insecure Plugin and API Exploits: Identifying vulnerabilities in third-party integrations and APIs that can serve as entry points for attackers.

- Stress Testing Resilience: Evaluating how AI systems handle adversarial inputs and unexpected conditions to ensure reliability and robustness under pressure.

Our AI and LLM Red Teaming and Penetration Testing Methodology

1. Threat Modeling and Reconnaissance: We thoroughly map the attack surface of your AI system, pinpointing potential vulnerabilities across models, datasets, infrastructure, and third-party integrations.

2. Adversarial Simulation: We emulate real-world attack methods, including:

- Prompt Injection: Crafting inputs to manipulate model outputs.

- Model Inversion: Reconstructing sensitive information from model outputs.

- Data Poisoning: Compromising the integrity of AI training processes by introducing malicious data.

3. Attack Execution and Analysis: We perform controlled attack scenarios to assess your defenses and identify exploitable weaknesses within your system.

4.Impact Assessment and Recommendations: We provide comprehensive reports detailing discovered vulnerabilities, their potential impacts, and actionable, step-by-step recommendations to mitigate risks and enhance your AI system’s security posture.

Why Choose DTS Solution?

The S3CURE/AI Framework offers comprehensive AI security advantages:

- Aligned with Industry Standards: Built on leading frameworks like MITRE ATLAS, OWASP LLM Top 10, and ISO/IEC 42001 for robust and standardized AI security.

- Expert Offensive Security Team: Our Red Team comprises seasoned professionals with deep expertise in AI exploitation and cybersecurity.

- Continuous Improvement: We integrate feedback loops and the latest threat intelligence to ensure your AI defenses stay adaptive and resilient.

- Integrated Governance: Our approach aligns with AI Governance Management Systems (AIMS), ensuring your AI deployments remain ethical, transparent, and compliant.

Real-World AI Threat Scenarios

1. Prompt Injection in Customer Support Bots

- Impact: Unauthorized disclosure of sensitive customer data, leading to GDPR violations.

- Defense: Simulated prompt injection exercises and robust input validation strategies.

2. Training Data Poisoning in Financial Models

- Impact: Biased or incorrect financial predictions, resulting in financial losses.

- Defense: Integrity checks and simulated data poisoning attacks to ensure dataset security.

3. Model Inversion in Healthcare AI

- Impact: Patient privacy violations and HIPAA compliance issues.

- Defense: Differential privacy techniques and rigorous leakage testing.

Key Takeaways

- Proactive Security: Red Teaming and Penetration Testing uncover AI vulnerabilities before they can be exploited.

- Holistic Protection: The S3CURE/AI Framework combines offensive security with governance for comprehensive AI defense.

- Adaptive Resilience: Continuous improvement strategies ensure your AI systems stay secure in a rapidly evolving threat landscape.

Conclusion

As AI systems advance, so are the threats they face. Ensuring the security of your GenAI and LLM deployments is more than simply a technical requirement; it is a strategic one. DTS Solution’s S3CURE/AI Framework provides a proactive and sophisticated approach to discovering vulnerabilities, enhancing defenses, and aligning with industry standards. By integrating Red Teaming, Penetration Testing, and AI governance, we empower your organization to confidently innovate while staying protected against evolving threats.

Discover how DTS Solution’s S3CURE/AI can fortify your AI systems: Generative AI Security

Why Choose DTS Solution?

The S3CURE/AI Framework offers comprehensive AI security advantages:

- Aligned with Industry Standards: Built on leading frameworks like MITRE ATLAS, OWASP LLM Top 10, and ISO/IEC 42001 for robust and standardized AI security.

- Expert Offensive Security Team: Our Red Team comprises seasoned professionals with deep expertise in AI exploitation and cybersecurity.

- Continuous Improvement: We integrate feedback loops and the latest threat intelligence to ensure your AI defenses stay adaptive and resilient.

- Integrated Governance: Our approach aligns with AI Governance Management Systems (AIMS), ensuring your AI deployments remain ethical, transparent, and compliant.

Real-World AI Threat Scenarios

1. Prompt Injection in Customer Support Bots

- Impact: Unauthorized disclosure of sensitive customer data, leading to GDPR violations.

- Defense: Simulated prompt injection exercises and robust input validation strategies.

2. Training Data Poisoning in Financial Models

- Impact: Biased or incorrect financial predictions, resulting in financial losses.

- Defense: Integrity checks and simulated data poisoning attacks to ensure dataset security.

3. Model Inversion in Healthcare AI

- Impact: Patient privacy violations and HIPAA compliance issues.

- Defense: Differential privacy techniques and rigorous leakage testing.

Key Takeaways

- Proactive Security: Red Teaming and Penetration Testing uncover AI vulnerabilities before they can be exploited.

- Holistic Protection: The S3CURE/AI Framework combines offensive security with governance for comprehensive AI defense.

- Adaptive Resilience: Continuous improvement strategies ensure your AI systems stay secure in a rapidly evolving threat landscape.

Conclusion

As AI systems advance, so are the threats they face. Ensuring the security of your GenAI and LLM deployments is more than simply a technical requirement; it is a strategic one. DTS Solution’s S3CURE/AI Framework provides a proactive and sophisticated approach to discovering vulnerabilities, enhancing defenses, and aligning with industry standards. By integrating Red Teaming, Penetration Testing, and AI governance, we empower your organization to confidently innovate while staying protected against evolving threats.

Discover how DTS Solution’s S3CURE/AI can fortify your AI systems: Generative AI Security

See also: