Big Data Security Assessment

- Big data lets you reach more complete answers because you have more information to work with.

- More complete answers mean more confidence in the data, which enables a completely different approach to tackling problems.

Big Data refers to datasets whose size and/or structure is beyond the ability of traditional software tools or database systems to store, process, and analyze within reasonable timeframes.

Hadoop is one of the main computing environments for Big Data. It is built on top of a distributed, clustered file system (HDFS) designed specifically for large-scale data operations, and it has been widely adopted by enterprises.

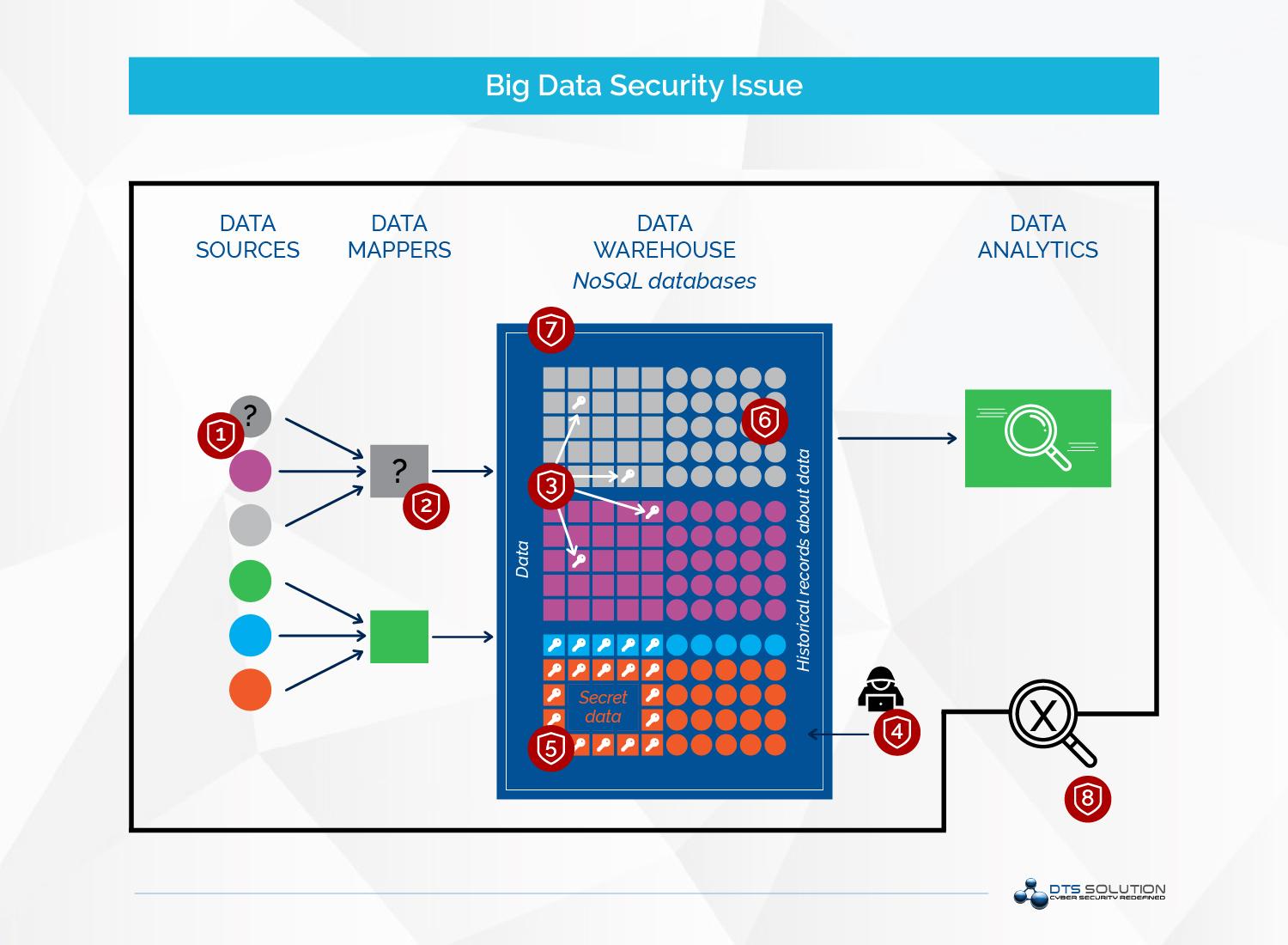

- The primary goal of an attacker is to obtain sensitive data that sits in a Big Data cluster. Organizations collect and process large volumes of sensitive information about customers, employees, intellectual property (IP), and finances. This confidential information is aggregated and centralized in one place for analysis in order to increase its value. That centralization makes the cluster a valuable target for attackers and puts the confidential information at risk of exposure.

- Attacks may also attempt to destroy or modify data, or to disrupt the availability of the platform.

Understanding the architecture and cluster composition of the ecosystem in place is the first step in putting together a security strategy. It is important to treat each component interface as an attack target.

Each component offers an attacker a specific set of potential exploits, while defenders have a corresponding set of options for attack detection and prevention.

Threats on Big Data Platforms

Ensure that the Big Data cluster APIs are protected from code injection, command injection, and buffer overflow attacks.
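One common way to reduce command-injection risk is to validate user-supplied input against an allow-list and to pass it to the external program as a separate argument, never as part of a shell string. The sketch below illustrates the idea for a service that lists an HDFS directory with `hdfs dfs -ls`. The function name `build_hdfs_ls_command` and the path allow-list are hypothetical and chosen only for illustration; a real deployment would adapt the pattern to its own naming rules.

```python
import re
from typing import List

# Hypothetical allow-list: absolute paths built only from letters, digits,
# underscore, hyphen, dot, and slash. Anything else is rejected.
_SAFE_PATH = re.compile(r"/[A-Za-z0-9_\-./]*")


def build_hdfs_ls_command(path: str) -> List[str]:
    """Return an argument vector for `hdfs dfs -ls <path>`, or raise ValueError."""
    if not _SAFE_PATH.fullmatch(path):
        raise ValueError(f"rejected unsafe path: {path!r}")
    if ".." in path.split("/"):
        # Refuse parent-directory traversal even though the characters are allowed.
        raise ValueError(f"rejected path traversal: {path!r}")
    # Each element is handed to the process as its own argument, so no shell
    # ever interprets characters such as ';' or '|'.
    return ["hdfs", "dfs", "-ls", path]
```

The returned list can be passed to `subprocess.run(argv, check=True)` without `shell=True`, so the path is never parsed by a shell.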

Encryption helps protect against attempts to access data outside established application interfaces. Theft of archives and direct reading of files from disk can be mitigated with encryption at the file or HDFS layer. This protects files against direct access by users, because only the file services are supplied with the encryption keys. Third-party products can provide advanced transparent encryption options for both HDFS and non-HDFS file formats. Transport Layer Security (TLS) protects data in transit: it provides confidentiality, authentication via certificates, and data integrity verification.
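As an illustration of the TLS point, the following minimal sketch builds a server-side TLS context with Python's standard `ssl` module that refuses protocol versions older than TLS 1.2 and requires every client to present a certificate. The function name and its parameters are hypothetical, and the file paths are placeholders to be supplied by the deployment. Hadoop services configure TLS through their own settings, so this only demonstrates the general mechanism.

```python
import ssl
from typing import Optional


def make_mutual_tls_server_context(
    certfile: Optional[str] = None,
    keyfile: Optional[str] = None,
    cafile: Optional[str] = None,
) -> ssl.SSLContext:
    """Build a server-side context that requires client certificates."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocol versions
    ctx.verify_mode = ssl.CERT_REQUIRED           # clients must present a certificate
    if certfile:
        # The server's own identity (placeholder paths, supplied by the caller).
        ctx.load_cert_chain(certfile=certfile, keyfile=keyfile)
    if cafile:
        # The certificate authority trusted to sign client certificates.
        ctx.load_verify_locations(cafile=cafile)
    return ctx
```

With this context, a peer that cannot present a certificate signed by the trusted authority fails the handshake before any application data is exchanged.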

Holistic Approach for Big Data Security Operation

- Segregate administrative roles and restrict access to the minimum required.

- Direct access to files or data shall be addressed through a combination of role-based authorization, access control lists, file permissions, and segregation of administrative roles.

- Authenticate nodes before they join a cluster. If an attacker can add a node they control to the cluster, they can exfiltrate data. Certificate-based identity options provide strong authentication and improve security.

- Tokenization, masking, and data element encryption tools support data-centric security when the systems that process data cannot be fully trusted, or when data should not be shared with users.

- Keep encryption keys, certificates, and open-source libraries tracked and up to date. With hundreds of nodes, it is common for some to unintentionally run different configurations or remain unpatched.

- Use configuration management tools, recommended configurations, and pre-deployment checklists.
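The data-centric controls in the list above can be sketched in a few lines. The example below is a minimal illustration, not a product implementation: it uses a keyed hash (HMAC-SHA256) as a stand-in for tokenization, which is one-way and differs from vault-based tokenization that can map tokens back to the original values. The function names, the sample record, and the key are all hypothetical.

```python
import hashlib
import hmac


def tokenize(value: str, key: bytes) -> str:
    """Return a deterministic, non-reversible token (hex HMAC-SHA256 of the value)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask(value: str, visible: int = 4, fill: str = "*") -> str:
    """Replace all but the last `visible` characters with `fill`.

    Values no longer than `visible` are returned unchanged, so choose
    `visible` with the shortest expected value in mind.
    """
    visible = max(visible, 0)
    hidden = max(len(value) - visible, 0)
    return fill * hidden + value[hidden:]


# Hypothetical record and demo key; a real key would come from a key manager.
record = {"customer_id": "C-1001", "card_number": "4111111111111111"}
demo_key = b"demo-key-not-for-production"

protected = {
    "customer_id": tokenize(record["customer_id"], demo_key),
    "card_number": mask(record["card_number"]),
}
```

Because the token is deterministic for a given key, records can still be joined on the tokenized column without exposing the original identifier to the analytics cluster.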

Security Solutions

Apache Ranger: Ranger is a policy administration tool for Hadoop clusters. It includes a broad set of management functions, including auditing, key management, and fine-grained data access policies across HDFS, Hive, YARN, Solr, Kafka, and other modules.

Apache Ambari: Ambari is a facility for provisioning and managing Hadoop clusters. It helps administrators set configurations and propagate changes to the entire cluster.

Apache Knox: You can think of Knox as a Hadoop firewall. More precisely, it is an API gateway. It handles HTTP and RESTful requests, enforcing authentication and usage policies on inbound requests and blocking everything else.

Monitoring: Hive, PIQL, Impala, Spark SQL, and similar modules offer SQL or pseudo-SQL syntax. This lets you leverage activity monitoring, dynamic masking, redaction, and tokenization technologies originally developed for relational platforms.
