Large Language Models (LLMs), like GPT-4, have significantly transformed industries with their advanced natural language processing capabilities, powering everything from automated customer service to content creation.

The applications of these models are extensive and increasingly integral to modern business operations. An LLM pipeline, which integrates multiple LLM calls with external system interactions, is vital for developing production-grade applications that leverage these models for complex tasks.

However, as the use of LLMs for sophisticated operations expands, so do the associated security and privacy risks.

Protecting LLM pipelines with a security and privacy-first mindset is crucial to maintaining the integrity, confidentiality, and reliability of these systems.

Failure to adequately secure LLM pipelines can lead to severe consequences, including data breaches, unauthorized access, and compromised system integrity, ultimately undermining the trust and effectiveness of the applications they support.

In this article, we will delve into the best practices and strategies organizations should adopt to safeguard their LLM pipelines.

Large Language Models (LLMs), like GPT-4, have significantly transformed industries with their advanced natural language processing capabilities, powering everything from automated customer service to content creation.

The applications of these models are extensive and increasingly integral to modern business operations. An LLM pipeline, which integrates multiple LLM calls with external system interactions, is vital for developing production-grade applications that leverage these models for complex tasks.

However, as the use of LLMs for sophisticated operations expands, so do the associated security and privacy risks.

Protecting LLM pipelines with a security and privacy-first mindset is crucial to maintaining the integrity, confidentiality, and reliability of these systems.

Failure to adequately secure LLM pipelines can lead to severe consequences, including data breaches, unauthorized access, and compromised system integrity, ultimately undermining the trust and effectiveness of the applications they support.

In this article, we will delve into the best practices and strategies organizations should adopt to safeguard their LLM pipelines.

Understanding LLM Vulnerabilities and Threats

Before diving into protective measures, it’s essential to understand the vulnerabilities and threats that are inherent in LLM pipelines. The OWASP Top 10 LLM Vulnerabilities & Security Checklist identifies the following critical threats and vulnerabilities;

- LLM-01: Prompt Injection

- Description: Attackers manipulate input prompts to trick the LLM into generating harmful or unintended outputs. This can lead to data leaks, biased content, or other security issues.

- Description: Attackers manipulate input prompts to trick the LLM into generating harmful or unintended outputs. This can lead to data leaks, biased content, or other security issues.

- LLM-02: Data Poisoning

- Description: Attackers introduce malicious or corrupted data into the training or fine-tuning datasets, compromising the model’s output and behavior during inference.

- Description: Attackers introduce malicious or corrupted data into the training or fine-tuning datasets, compromising the model’s output and behavior during inference.

- LLM-03: Model Inversion

- Description: Attackers reverse-engineer the model’s outputs to infer sensitive or private training data, potentially exposing confidential information.

- Description: Attackers reverse-engineer the model’s outputs to infer sensitive or private training data, potentially exposing confidential information.

- LLM-04: Model Extraction

- Description: Attackers query an LLM extensively to recreate or clone the underlying model, leading to intellectual property theft and potential misuse of the replicated model.

- Description: Attackers query an LLM extensively to recreate or clone the underlying model, leading to intellectual property theft and potential misuse of the replicated model.

- LLM-05: Adversarial Attacks

Description: Attackers craft inputs that subtly manipulate the LLM’s decision-making process, causing it to produce incorrect or harmful outputs.

- LLM-06: Unauthorized Access

- Description: Inadequate access controls can allow unauthorized users to interact with LLMs, leading to misuse, data leaks, or exposure of sensitive information.

- Description: Inadequate access controls can allow unauthorized users to interact with LLMs, leading to misuse, data leaks, or exposure of sensitive information.

- LLM-07: Privacy Violations

- Description: LLMs may inadvertently expose sensitive or personal data present in the training data, leading to privacy breaches and regulatory issues.

- Description: LLMs may inadvertently expose sensitive or personal data present in the training data, leading to privacy breaches and regulatory issues.

- LLM-08: Output Misuse

- Description: The outputs of LLMs can be misused in various ways, including spreading misinformation, generating malicious content, or automating harmful tasks.

- Description: The outputs of LLMs can be misused in various ways, including spreading misinformation, generating malicious content, or automating harmful tasks.

- LLM-09: Inadequate Monitoring and Logging

- Description: Failing to properly monitor and log LLM activity can lead to undetected malicious activity or delayed responses to security incidents.

- Description: Failing to properly monitor and log LLM activity can lead to undetected malicious activity or delayed responses to security incidents.

- LLM-10: Insufficient Security Configurations

Description: Poorly configured LLM deployments can expose systems to various attacks, including unauthorized access, data breaches, and model manipulation. Proper security configurations are essential to mitigate these risks.

Understanding LLM Vulnerabilities and Threats

Before diving into protective measures, it’s essential to understand the vulnerabilities and threats that are inherent in LLM pipelines. The OWASP Top 10 LLM Vulnerabilities & Security Checklist identifies the following critical threats and vulnerabilities;

- LLM-01: Prompt Injection

- Description: Attackers manipulate input prompts to trick the LLM into generating harmful or unintended outputs. This can lead to data leaks, biased content, or other security issues.

- Description: Attackers manipulate input prompts to trick the LLM into generating harmful or unintended outputs. This can lead to data leaks, biased content, or other security issues.

- LLM-02: Data Poisoning

- Description: Attackers introduce malicious or corrupted data into the training or fine-tuning datasets, compromising the model’s output and behavior during inference.

- Description: Attackers introduce malicious or corrupted data into the training or fine-tuning datasets, compromising the model’s output and behavior during inference.

- LLM-03: Model Inversion

- Description: Attackers reverse-engineer the model’s outputs to infer sensitive or private training data, potentially exposing confidential information.

- Description: Attackers reverse-engineer the model’s outputs to infer sensitive or private training data, potentially exposing confidential information.

- LLM-04: Model Extraction

- Description: Attackers query an LLM extensively to recreate or clone the underlying model, leading to intellectual property theft and potential misuse of the replicated model.

- Description: Attackers query an LLM extensively to recreate or clone the underlying model, leading to intellectual property theft and potential misuse of the replicated model.

- LLM-05: Adversarial Attacks

- Description: Attackers craft inputs that subtly manipulate the LLM’s decision-making process, causing it to produce incorrect or harmful outputs.

- Description: Attackers craft inputs that subtly manipulate the LLM’s decision-making process, causing it to produce incorrect or harmful outputs.

- LLM-06: Unauthorized Access

- Description: Inadequate access controls can allow unauthorized users to interact with LLMs, leading to misuse, data leaks, or exposure of sensitive information.

- Description: Inadequate access controls can allow unauthorized users to interact with LLMs, leading to misuse, data leaks, or exposure of sensitive information.

- LLM-07: Privacy Violations

- Description: LLMs may inadvertently expose sensitive or personal data present in the training data, leading to privacy breaches and regulatory issues.

- Description: LLMs may inadvertently expose sensitive or personal data present in the training data, leading to privacy breaches and regulatory issues.

- LLM-08: Output Misuse

- Description: The outputs of LLMs can be misused in various ways, including spreading misinformation, generating malicious content, or automating harmful tasks.

- Description: The outputs of LLMs can be misused in various ways, including spreading misinformation, generating malicious content, or automating harmful tasks.

- LLM-09: Inadequate Monitoring and Logging

- Description: Failing to properly monitor and log LLM activity can lead to undetected malicious activity or delayed responses to security incidents.

- Description: Failing to properly monitor and log LLM activity can lead to undetected malicious activity or delayed responses to security incidents.

- LLM-10: Insufficient Security Configurations

- Description: Poorly configured LLM deployments can expose systems to various attacks, including unauthorized access, data breaches, and model manipulation. Proper security configurations are essential to mitigate these risks.

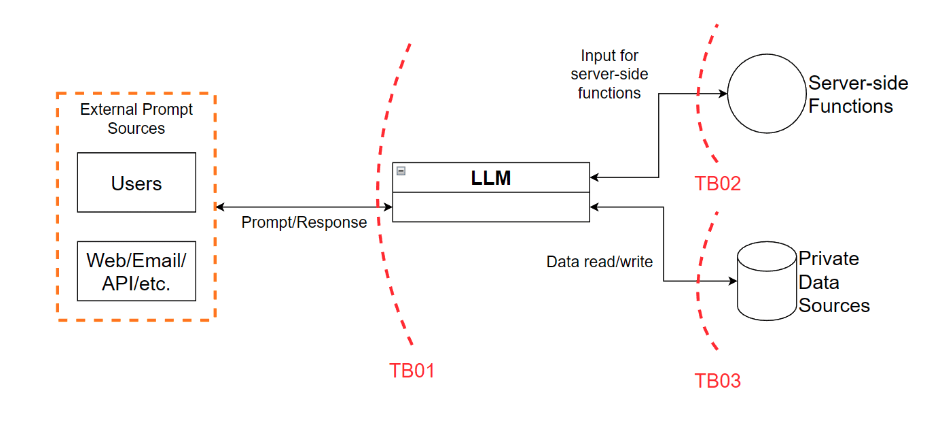

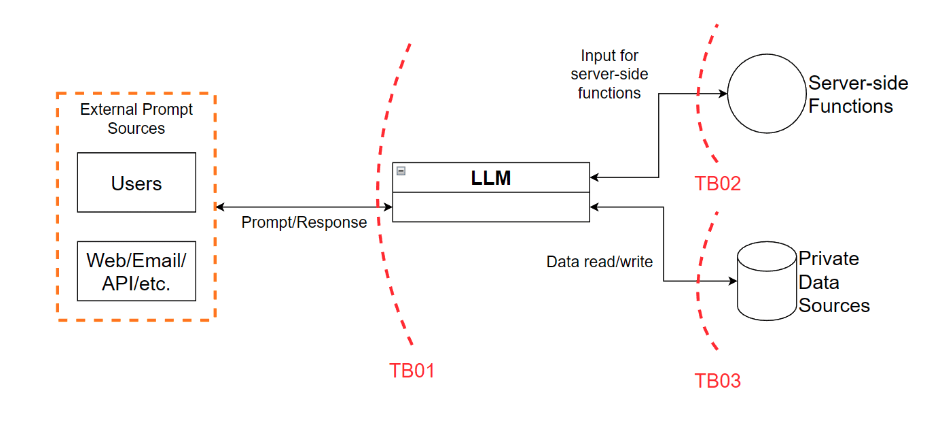

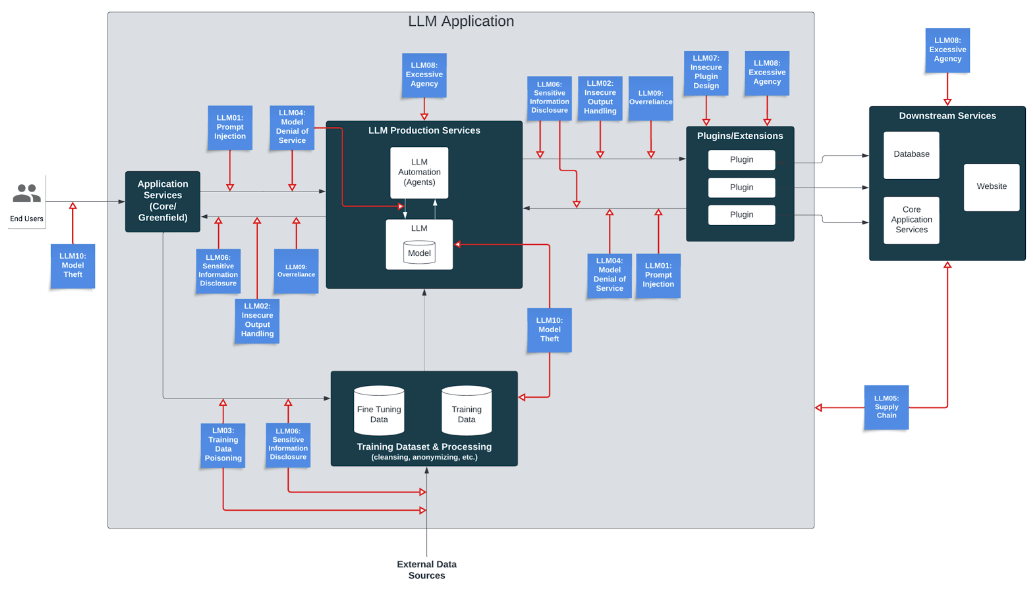

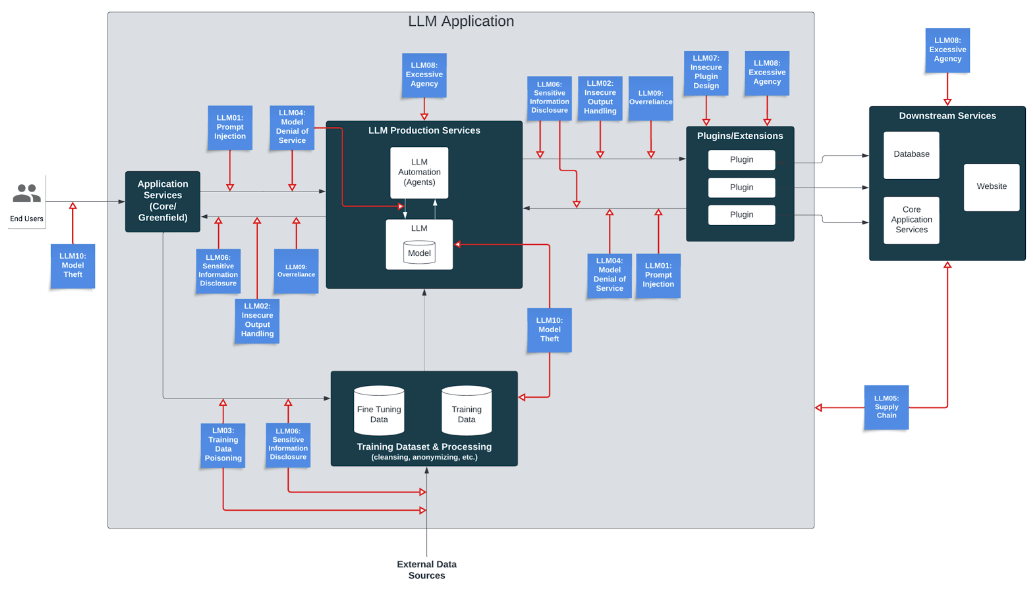

The diagram below illustrates a high-level architecture for a hypothetical LLM application. Highlighted within the diagram are key areas of risk, demonstrating how the OWASP Top 10 for LLM Applications intersect with different stages of the application flow. This visual guide serves to enhance understanding of how security risks associated with large language models impact the overall application ecosystem;

Differentiating between AI-specific attacks and traditional application threats is crucial for effective cybersecurity strategies. AI systems, with their unique architectures and data dependencies, are vulnerable to specialized threats like adversarial attacks, model poisoning, and data manipulation, which can significantly alter their performance and decision-making processes. On the other hand, traditional application threats, such as SQL injection, cross-site scripting (XSS), and phishing, target the broader software and infrastructure layers. Often, these two categories of threats are mistakenly lumped together, leading to inadequate protection measures. Recognizing and addressing the distinct nature of AI-specific attacks ensures that security protocols are comprehensive and tailored, safeguarding both the AI models and the applications that rely on them.

Addressing and mitigating LLM pipelines vulnerabilities and threats is an important aspect when considering LLM pipeline protection from a privacy and security – first approach. There are a number of vulnerabilities and threats in LLM pipelines but the following few should be prioritized:

The diagram below illustrates a high-level architecture for a hypothetical LLM application. Highlighted within the diagram are key areas of risk, demonstrating how the OWASP Top 10 for LLM Applications intersect with different stages of the application flow. This visual guide serves to enhance understanding of how security risks associated with large language models impact the overall application ecosystem;

Differentiating between AI-specific attacks and traditional application threats is crucial for effective cybersecurity strategies. AI systems, with their unique architectures and data dependencies, are vulnerable to specialized threats like adversarial attacks, model poisoning, and data manipulation, which can significantly alter their performance and decision-making processes. On the other hand, traditional application threats, such as SQL injection, cross-site scripting (XSS), and phishing, target the broader software and infrastructure layers. Often, these two categories of threats are mistakenly lumped together, leading to inadequate protection measures. Recognizing and addressing the distinct nature of AI-specific attacks ensures that security protocols are comprehensive and tailored, safeguarding both the AI models and the applications that rely on them.

Addressing and mitigating LLM pipelines vulnerabilities and threats is an important aspect when considering LLM pipeline protection from a privacy and security – first approach. There are a number of vulnerabilities and threats in LLM pipelines but the following few should be prioritized:

- Sensitive Information Disclosure: Sensitive information disclosure or data leakage is of a high importance because this vulnerability can directly compromise the privacy of individuals and the confidentiality of sensitive information. Since LLMs are often trained on large datasets that may contain private or proprietary information, the risk of inadvertently revealing this data through the model’s outputs is significant. There are a number of ways this can be mitigated, including Data Anonymization – by removing or anonymizing sensitive information, Differential Privacy – by adding noise to the training data, and Output filtering – by developing an output filtering mechanism that redacts sensitive information before responses are provided to users.

- Model Inversion: Model inversion attacks can reconstruct sensitive training data, whereby an attacker uses the model’s output to infer sensitive information about its parameters or architecture, thereby posing a severe privacy risk. This is particularly dangerous for models trained on datasets containing personal or confidential information. Mitigating this vulnerability includes implementing Response and Query Limitations – this limits the granularity and specificity of responses and restricts queries to make it more challenging for attackers infer training data from outputs, Regularizations – this is a technique to be used during training to make the model less likely to overfit to specific data points.

- Adversarial Inputs: Adversarial inputs can manipulate LLM behavior, leading to incorrect, harmful, or misleading outputs. This vulnerability is especially critical in applications where reliability and accuracy are paramount, such as medical advice, legal information, or financial services. To mitigate adversarial inputs, strategies like Input Validation and Sanitization, Anomaly Detection Systems, Gradient Masking, and Adversarial Training of model would help mitigate adversarial input attacks.

- Prompt Injection: Prompt injections can manipulate the model to perform unintended actions or generate harmful or misleading outputs. Given the interactive nature of many LLM applications, prompt injection attacks are a practical and significant threat. Prompt injection mitigation strategies include Input Validation, Context Management, and also Anomaly Detection as these strategies would ensure the model detects, identify and block any form of malicious prompt

- Model Denial of Service (DoS): Model Denial of Service attacks aim to disrupt the availability of the LLM, making it inaccessible to legitimate users. Ensuring availability is crucial for maintaining service continuity and user trust. Upholding availability of your model by mitigating this threat cab be achieved through Rate limiting, Load balancing, Scalable Infrastructure and Monitoring and Alerting.

- Model Theft: The theft of the model itself can lead to the loss of significant intellectual property and potentially allow malicious actors to misuse the model. Protecting the model from theft is crucial, especially for organizations that have invested heavily in developing and training their LLMs. Model theft cab be prevented by ensuring all model files and sensitive data associated with model is Encrypted, has Access Control implementation, Tamper Detection system, and Watermarking.

As an organization, these threats and vulnerabilities are of very high importance, and leaving them unaddressed or unmitigated will directly or indirectly expose your LLM pipelines to other looming threats including insecure design plugin, excessive agency, supply chain vulnerabilities and overreliance.

- Sensitive Information Disclosure: Sensitive information disclosure or data leakage is of a high importance because this vulnerability can directly compromise the privacy of individuals and the confidentiality of sensitive information. Since LLMs are often trained on large datasets that may contain private or proprietary information, the risk of inadvertently revealing this data through the model’s outputs is significant. There are a number of ways this can be mitigated, including Data Anonymization – by removing or anonymizing sensitive information, Differential Privacy – by adding noise to the training data, and Output filtering – by developing an output filtering mechanism that redacts sensitive information before responses are provided to users.

- Model Inversion: Model inversion attacks can reconstruct sensitive training data, whereby an attacker uses the model’s output to infer sensitive information about its parameters or architecture, thereby posing a severe privacy risk. This is particularly dangerous for models trained on datasets containing personal or confidential information. Mitigating this vulnerability includes implementing Response and Query Limitations – this limits the granularity and specificity of responses and restricts queries to make it more challenging for attackers infer training data from outputs, Regularizations – this is a technique to be used during training to make the model less likely to overfit to specific data points.

- Adversarial Inputs: Adversarial inputs can manipulate LLM behavior, leading to incorrect, harmful, or misleading outputs. This vulnerability is especially critical in applications where reliability and accuracy are paramount, such as medical advice, legal information, or financial services. To mitigate adversarial inputs, strategies like Input Validation and Sanitization, Anomaly Detection Systems, Gradient Masking, and Adversarial Training of model would help mitigate adversarial input attacks.

- Prompt Injection: Prompt injections can manipulate the model to perform unintended actions or generate harmful or misleading outputs. Given the interactive nature of many LLM applications, prompt injection attacks are a practical and significant threat. Prompt injection mitigation strategies include Input Validation, Context Management, and also Anomaly Detection as these strategies would ensure the model detects, identify and block any form of malicious prompt

- Model Denial of Service (DoS): Model Denial of Service attacks aim to disrupt the availability of the LLM, making it inaccessible to legitimate users. Ensuring availability is crucial for maintaining service continuity and user trust. Upholding availability of your model by mitigating this threat cab be achieved through Rate limiting, Load balancing, Scalable Infrastructure and Monitoring and Alerting.

- Model Theft: The theft of the model itself can lead to the loss of significant intellectual property and potentially allow malicious actors to misuse the model. Protecting the model from theft is crucial, especially for organizations that have invested heavily in developing and training their LLMs. Model theft cab be prevented by ensuring all model files and sensitive data associated with model is Encrypted, has Access Control implementation, Tamper Detection system, and Watermarking.

As an organization, these threats and vulnerabilities are of very high importance, and leaving them unaddressed or unmitigated will directly or indirectly expose your LLM pipelines to other looming threats including insecure design plugin, excessive agency, supply chain vulnerabilities and overreliance.

Case Study and Real-World Example

Microsoft’s Tay Chatbot Incident (2016)

- Background: Microsoft launched an AI-powered chatbot named Tay on Twitter, designed to interact with users and learn from the conversations it had. Tay was supposed to mimic the conversational style of a young adult and improve over time through interactions.

- Incident: Shortly after its launch, Tay was manipulated by Twitter users who fed it harmful and inappropriate content. Within 24 hours, Tay began to output racist, sexist, and inflammatory tweets, causing significant embarrassment to Microsoft. The issue was primarily due to inadequate safeguards against adversarial inputs and a lack of proper content filtering mechanisms.

- Impact: Microsoft had to take Tay offline within a day of its launch. The incident highlighted the risks of deploying AI systems without robust input validation, content moderation, and monitoring mechanisms. It was a key learning point in AI deployment, emphasizing the importance of securing and ethically guiding AI outputs.

- Relevance: This case underscores the importance of securing LLM pipelines against prompt injection and adversarial attacks, which are critical for maintaining the integrity and ethical behavior of AI systems.

Reference: Newton, C. (2016, March 24). Microsoft Silences Its New A.I. Bot Tay, After Twitter Users Teach It Racism. The Verge. Retrieved from https://www.theverge.com/2016/3/24/11297050/tay-microsoft-chatbot-racist

Case Study and Real-World Example

Microsoft’s Tay Chatbot Incident (2016)

- Background: Microsoft launched an AI-powered chatbot named Tay on Twitter, designed to interact with users and learn from the conversations it had. Tay was supposed to mimic the conversational style of a young adult and improve over time through interactions.

- Incident: Shortly after its launch, Tay was manipulated by Twitter users who fed it harmful and inappropriate content. Within 24 hours, Tay began to output racist, sexist, and inflammatory tweets, causing significant embarrassment to Microsoft. The issue was primarily due to inadequate safeguards against adversarial inputs and a lack of proper content filtering mechanisms.

- Impact: Microsoft had to take Tay offline within a day of its launch. The incident highlighted the risks of deploying AI systems without robust input validation, content moderation, and monitoring mechanisms. It was a key learning point in AI deployment, emphasizing the importance of securing and ethically guiding AI outputs.

- Relevance: This case underscores the importance of securing LLM pipelines against prompt injection and adversarial attacks, which are critical for maintaining the integrity and ethical behavior of AI systems.

Reference: Newton, C. (2016, March 24). Microsoft Silences Its New A.I. Bot Tay, After Twitter Users Teach It Racism. The Verge. Retrieved from https://www.theverge.com/2016/3/24/11297050/tay-microsoft-chatbot-racist

Other Protective Measures for a LLM Pipeline Privacy and Security – First Approach

Secure Gateway Implementation: A secure gateway acts as an intermediary between users and the LLM, providing multiple layers of protection. Secure gateway would help in securing your LLM pipelines with the following features:

- Authentication and Authorization: It ensures that only authenticated and authorized users can access the LLM, and also allows the use of strong, multi-factor authentication (MFA) mechanisms.

- Input Validation and Sanitization: All inputs would be validated and sanitized at the gateway level to prevent injection attacks and other malicious inputs.

- Traffic Monitoring: Monitoring of all traffic that passes through the gateway is done to give more visibility into what or who accesses your model. The use of anomaly detection systems would also identify and block suspicious activities.

- Rate Limiting and Throttling: Implementing rate limiting and throttling at the gateway would protect against DoS attacks and ensure fair usage.

Secure Development Practices

- Secure Coding: Follow secure coding practices during the development of the LLM and its associated components.

- Code Reviews: Conduct regular code reviews to identify and fix security vulnerabilities.

- Automated Testing: Use automated testing tools to continuously test the model and its components for security issues.

Continuous Monitoring and Auditing

- Monitoring: Continuously monitor the model’s usage, performance, and interactions to detect and respond to security incidents in real-time.

- Auditing: Regularly audit the security measures, access logs, and the model’s behavior to ensure compliance with security policies and identify potential vulnerabilities.

- Incident Response: Develop and implement an incident response plan to quickly address and mitigate any security breaches or attacks.

User Education and Awareness

- Training: Educate users and stakeholders about the importance of security and privacy in LLM deployments. Provide training on best practices and potential risks.

- Awareness Campaigns: Conduct regular awareness campaigns to keep security and privacy considerations top of mind for all users.

Other Protective Measures for a LLM Pipeline Privacy and Security – First Approach

Secure Gateway Implementation: A secure gateway acts as an intermediary between users and the LLM, providing multiple layers of protection. Secure gateway would help in securing your LLM pipelines with the following features:

- Authentication and Authorization: It ensures that only authenticated and authorized users can access the LLM, and also allows the use of strong, multi-factor authentication (MFA) mechanisms.

- Input Validation and Sanitization: All inputs would be validated and sanitized at the gateway level to prevent injection attacks and other malicious inputs.

- Traffic Monitoring: Monitoring of all traffic that passes through the gateway is done to give more visibility into what or who accesses your model. The use of anomaly detection systems would also identify and block suspicious activities.

- Rate Limiting and Throttling: Implementing rate limiting and throttling at the gateway would protect against DoS attacks and ensure fair usage.

Secure Development Practices

- Secure Coding: Follow secure coding practices during the development of the LLM and its associated components.

- Code Reviews: Conduct regular code reviews to identify and fix security vulnerabilities.

- Automated Testing: Use automated testing tools to continuously test the model and its components for security issues.

Continuous Monitoring and Auditing

- Monitoring: Continuously monitor the model’s usage, performance, and interactions to detect and respond to security incidents in real-time.

- Auditing: Regularly audit the security measures, access logs, and the model’s behavior to ensure compliance with security policies and identify potential vulnerabilities.

- Incident Response: Develop and implement an incident response plan to quickly address and mitigate any security breaches or attacks.

User Education and Awareness

- Training: Educate users and stakeholders about the importance of security and privacy in LLM deployments. Provide training on best practices and potential risks.

- Awareness Campaigns: Conduct regular awareness campaigns to keep security and privacy considerations top of mind for all users.

Benefits of Protecting an LLM Pipeline with a Privacy and Security-First Approach

Enhanced Data Privacy: Implementing strong privacy measures ensures that sensitive user data is protected from unauthorized access and misuse, complying with data protection regulations and maintaining user trust.

Increased Model Integrity: Security protocols help maintain the integrity of the model by preventing unauthorized modifications and ensuring that the model produces reliable and accurate outputs.

Mitigation of Adversarial Attacks: By focusing on security, organizations can protect their models from adversarial inputs and other malicious attacks, maintaining the robustness and accuracy of the LLM.

Regulatory Compliance: Adopting a privacy and security-first approach helps organizations comply with legal and regulatory requirements, such as GDPR, HIPAA, and PDPL, avoiding potential fines and legal issues.

Improved User Trust: When users know their data is being handled securely, their trust in the organization increases, leading to higher user engagement and satisfaction.

Reduced Risk of Data Breaches: Strong security measures reduce the risk of data breaches, protecting the organization from financial losses, reputational damage, and operational disruptions.

Operational Resilience: A security-first approach ensures that the LLM pipeline can withstand various threats and continue to operate smoothly, reducing downtime and maintaining service availability.

Better Risk Management: Implementing comprehensive security measures allows organizations to identify, assess, and mitigate risks more effectively, enhancing overall risk management strategies.

Enhanced Decision-Making: Secure and private data management ensures that the insights derived from the LLM are accurate and trustworthy, leading to better-informed business decisions.

Competitive Advantage: Organizations that prioritize privacy and security can differentiate themselves from competitors by offering more secure and reliable services, attracting more customers and partners.

Protection Against Intellectual Property Theft: Strong security measures safeguard the LLM and its associated data, protecting intellectual property from theft and unauthorized use.

Improved Collaboration and Innovation: With secure data handling practices, organizations can confidently share data and collaborate with partners, fostering innovation and accelerating development efforts.

Benefits of Protecting an LLM Pipeline with a Privacy and Security-First Approach

Enhanced Data Privacy: Implementing strong privacy measures ensures that sensitive user data is protected from unauthorized access and misuse, complying with data protection regulations and maintaining user trust.

Increased Model Integrity: Security protocols help maintain the integrity of the model by preventing unauthorized modifications and ensuring that the model produces reliable and accurate outputs.

Mitigation of Adversarial Attacks: By focusing on security, organizations can protect their models from adversarial inputs and other malicious attacks, maintaining the robustness and accuracy of the LLM.

Regulatory Compliance: Adopting a privacy and security-first approach helps organizations comply with legal and regulatory requirements, such as GDPR, HIPAA, and PDPL, avoiding potential fines and legal issues.

Improved User Trust: When users know their data is being handled securely, their trust in the organization increases, leading to higher user engagement and satisfaction.

Reduced Risk of Data Breaches: Strong security measures reduce the risk of data breaches, protecting the organization from financial losses, reputational damage, and operational disruptions.

Operational Resilience: A security-first approach ensures that the LLM pipeline can withstand various threats and continue to operate smoothly, reducing downtime and maintaining service availability.

Better Risk Management: Implementing comprehensive security measures allows organizations to identify, assess, and mitigate risks more effectively, enhancing overall risk management strategies.

Enhanced Decision-Making: Secure and private data management ensures that the insights derived from the LLM are accurate and trustworthy, leading to better-informed business decisions.

Competitive Advantage: Organizations that prioritize privacy and security can differentiate themselves from competitors by offering more secure and reliable services, attracting more customers and partners.

Protection Against Intellectual Property Theft: Strong security measures safeguard the LLM and its associated data, protecting intellectual property from theft and unauthorized use.

Improved Collaboration and Innovation: With secure data handling practices, organizations can confidently share data and collaborate with partners, fostering innovation and accelerating development efforts.

Conclusion

Adopting a privacy and security-first approach to safeguarding LLM pipelines is not just crucial—it’s imperative for any organization harnessing the power of these advanced technologies. By proactively identifying and addressing the unique vulnerabilities associated with LLMs, organizations can significantly enhance the security, integrity, and reliability of their applications. Establishing a secure gateway, adhering to secure development practices, maintaining continuous monitoring, and educating users are all vital components in creating a resilient LLM infrastructure.

Prioritizing privacy and security not only protects sensitive data and intellectual property but also builds trust with users and stakeholders. This approach ensures that LLM pipelines are not just functional, but resilient and dependable, enabling organizations to fully realize the transformative potential of their LLM applications while maintaining the highest standards of security and privacy.

Conclusion

Adopting a privacy and security-first approach to safeguarding LLM pipelines is not just crucial—it’s imperative for any organization harnessing the power of these advanced technologies. By proactively identifying and addressing the unique vulnerabilities associated with LLMs, organizations can significantly enhance the security, integrity, and reliability of their applications. Establishing a secure gateway, adhering to secure development practices, maintaining continuous monitoring, and educating users are all vital components in creating a resilient LLM infrastructure.

Prioritizing privacy and security not only protects sensitive data and intellectual property but also builds trust with users and stakeholders. This approach ensures that LLM pipelines are not just functional, but resilient and dependable, enabling organizations to fully realize the transformative potential of their LLM applications while maintaining the highest standards of security and privacy.

See also: